Hydra-7 Status


You can view the list of all the available modules as an HTML document or as a plain ASCII text file.

You can also check the bandwidth between SAO and HDC. You can set this page to refresh every 5m, 20m, or 1hr; this copy auto-refreshes every 5m.


Usage

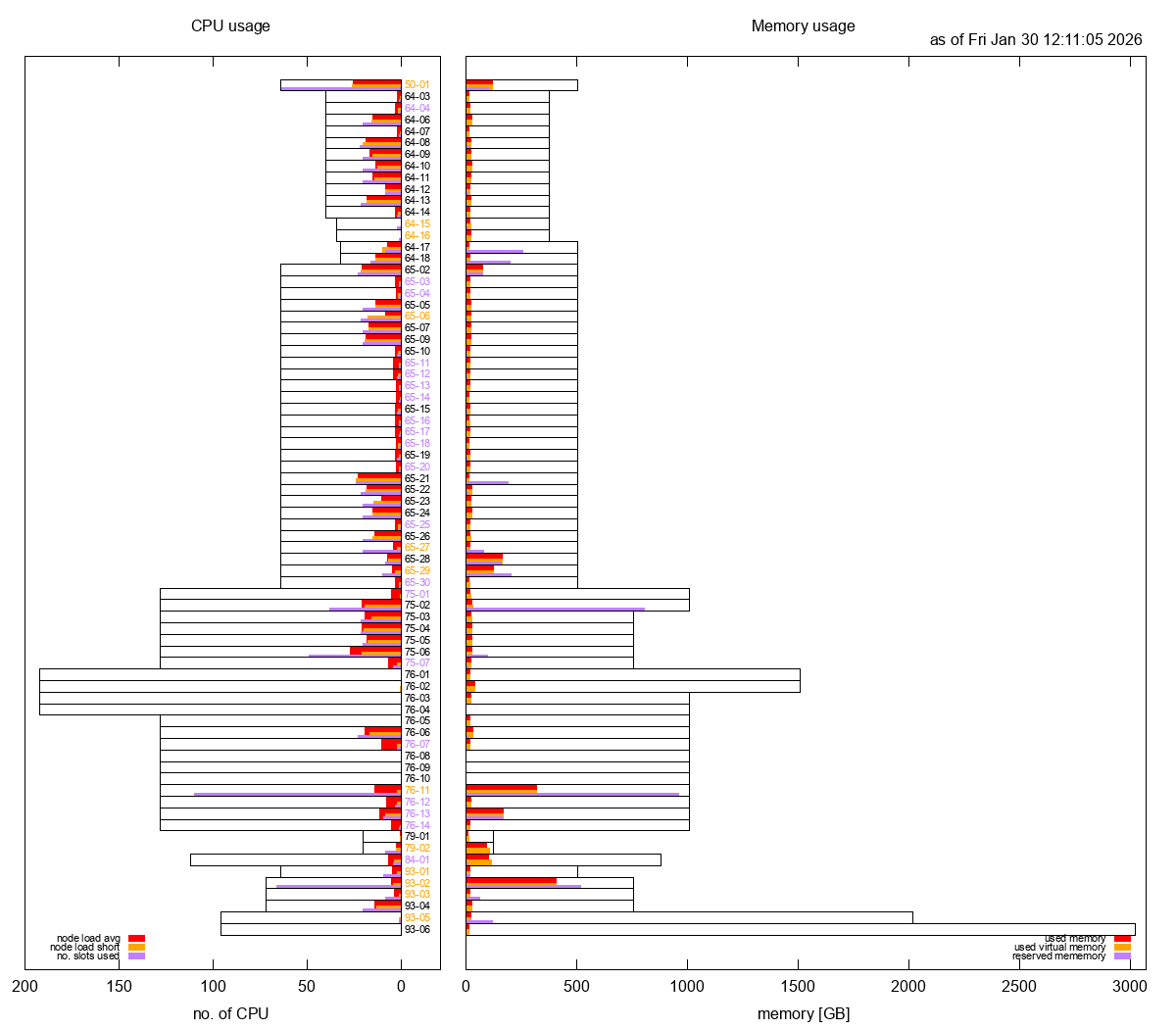

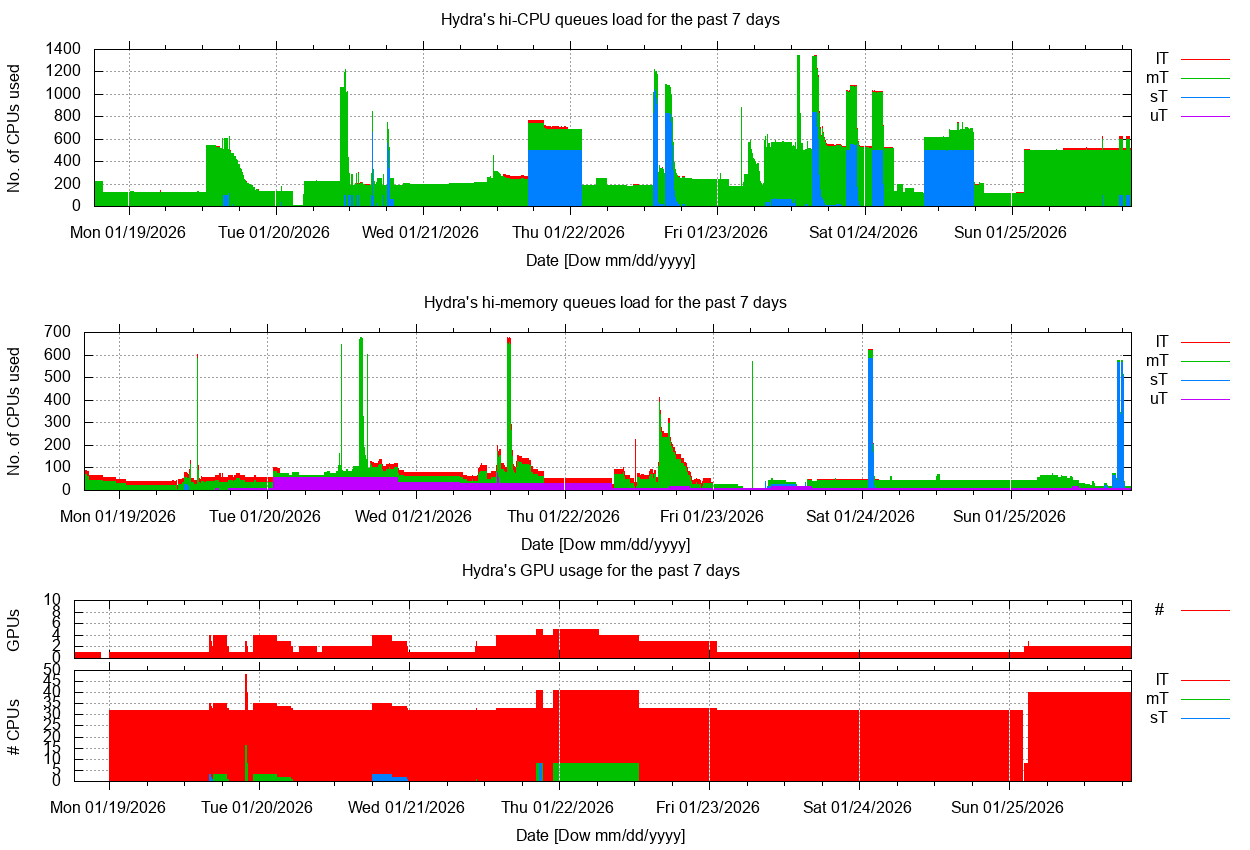

Plots: the current snapshot sorted by the node attribute selected in the drop-down menu, and usage vs. time for the selected length, with the selected user highlighted.

As of Tue May 13 18:07:03 2025: #CPUs/nodes 5636/74, 2 down.

Loads: head node: 0.80, login nodes: 0.40, 0.05, 0.08, 0.04; NSDs: 3.75, 3.81; licenses: 1 idlrt used.

Queues status: 24 disabled, 15 need attention, none in error state.

24 users with running jobs (slots/jobs):

Current load: 534.6, #running (slots/jobs): 874/83, usage: 15.5%, efficiency: 61.2%
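The usage and efficiency figures on the line above are consistent with two simple ratios: usage = running slots / total CPUs (874/5636) and efficiency = current load / running slots (534.6/874). A minimal sketch that recomputes them; the formulas are inferred from the numbers on this page, not taken from mk-webpage.pl.

```python
def cluster_usage(running_slots: int, total_cpus: int, load: float) -> tuple[float, float]:
    """Return (usage %, efficiency %) as inferred from the status-page numbers.

    usage      = running slots / total CPUs
    efficiency = current load / running slots
    """
    usage = 100.0 * running_slots / total_cpus
    efficiency = 100.0 * load / running_slots
    return usage, efficiency


usage, eff = cluster_usage(running_slots=874, total_cpus=5636, load=534.6)
print(f"usage: {usage:.1f}%, efficiency: {eff:.1f}%")  # usage: 15.5%, efficiency: 61.2%
```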

1 user with queued jobs (jobs/tasks/slots):

vohsens=1/32/1280

Total number of queued jobs/tasks/slots: 1/32/1,280
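The queued summary is consistent with a single array job (7584385, listed under Waiting Job(s)) whose pending task range is 35-66:1 and which requests 40 slots per task: 32 tasks × 40 slots = 1,280 slots. A small sketch that expands Grid Engine's first-last:step task-range notation; `count_tasks` is a hypothetical helper written for illustration and handles only that simple form.

```python
import re


def count_tasks(spec: str) -> int:
    """Number of tasks in a Grid Engine-style task range "first[-last[:step]]"."""
    match = re.fullmatch(r"(\d+)(?:-(\d+)(?::(\d+))?)?", spec)
    if match is None:
        raise ValueError(f"unrecognized task range: {spec!r}")
    first = int(match.group(1))
    last = int(match.group(2) or first)
    step = int(match.group(3) or 1)
    return (last - first) // step + 1


tasks = count_tasks("35-66:1")  # pending tasks of job 7584385
slots_per_task = 40             # nPEs of that job
print(f"jobs/tasks/slots: 1/{tasks}/{tasks * slots_per_task}")  # jobs/tasks/slots: 1/32/1280
```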

60 users have or had running or queued jobs over the past 7 days, 73 over the past 15 days, and 96 over the past 30 days.

Click on the tabs to view each section, and on the plots to view larger versions.

You can view the current cluster snapshot sorted by name, number of CPUs, usage, load, or memory, and view the past load for 7, 15, or 30 days, as well as highlight a given user, by selecting the corresponding options in the drop-down menus.

This page was last updated on Tuesday, 13-May-2025 18:11:47 EDT with mk-webpage.pl ver. 7.2/1 (Aug 2024/SGK) in 0:41.

Warnings

Oversubscribed Jobs

As of Tue May 13 18:07:03 EDT 2025 (2 oversubscribed jobs, showing no more than 3 per user). Total running (PEs/jobs) = 874/83, 1 queued (jobs); showing only oversubscribed jobs (cpu% > 133% & age > 1h) for all users.

| jobID | name | user | age | nPEs | cpu% | queue | node | taskID |
|---|---|---|---|---|---|---|---|---|
| 7801067 | A7_Optuna_Optim | mghahrem | 03:07 | 1 | 135.5% | mTgpu.q | 50-01 | |
| 7801102 | A8_Optuna_Optim | mghahrem | 03:06 | 1 | 140.8% | mTgpu.q | 79-01 | |

⇒ Equivalent to 0.8 overused CPUs: 2 CPUs used at 138.2% on average.

Inefficient Jobs

As of Tue May 13 18:07:03 EDT 2025 (10 inefficient jobs, showing no more than 3 per user). Total running (PEs/jobs) = 874/83, 1 queued (jobs); showing only inefficient jobs (cpu% < 33% & age > 1h) for all users.

| jobID | name | user | age | nPEs | cpu% | queue | node | taskID |
|---|---|---|---|---|---|---|---|---|
| 7719868 | snp_call | wangt2 | 18:47 | 50 | 2.0% | mThC.q | 76-12 | |
| 7224720 | newV_lognormal | jassoj | +31:23 | 40 | 18.0% | lThC.q | 64-13 | |
| 7224721 | newV_lognormal | jassoj | +31:23 | 40 | 14.5% | lThC.q | 65-27 | |
| 7229516 | newV_Flognormal | jassoj | +29:05 | 40 | 15.5% | lThC.q | 76-13 | |
| 7584385 | phyloflash_pool | vohsens | 02:45 | 40 | 24.5% | sThM.q | 93-06 | 34 |
| 7778602 | rax_587 | hodelr | 07:14 | 40 | 2.5% | mThC.q | 65-24 | |
| 7787153 | rax_587boots | hodelr | 05:44 | 40 | 2.5% | mThC.q | 75-05 | |
| 7271216 | gadma_sch_1 | myerse | +5:05 | 35 | 26.4% | mThC.q | 65-10 | |
| 7672647 | vcf1 | uribeje | +1:03 | 8 | 13.4% | uThM.q | 65-26 | |
| 7672675 | vcf11 | uribeje | +1:03 | 8 | 13.3% | uThM.q | 65-19 | |

⇒ Equivalent to 297.6 underused CPUs: 341 CPUs used at 12.7% on average.
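Both summary lines (0.8 overused CPUs, 297.6 underused CPUs) can be reproduced by weighting each job's cpu% by its nPEs: the average is Σ nPEs·cpu% / Σ nPEs, and the net excess is Σ nPEs·(cpu%/100 − 1). A sketch under that inferred rule; `cpu_equivalents` is a hypothetical helper, not part of the page generator.

```python
def cpu_equivalents(jobs: list[tuple[int, float]]) -> tuple[int, float, float]:
    """Summarize (nPEs, cpu%) pairs the way the warning tables appear to.

    Returns (total CPUs, nPEs-weighted average cpu%, net excess in CPUs);
    a positive excess means overused CPUs, a negative one underused CPUs.
    """
    total = sum(n for n, _ in jobs)
    busy = sum(n * pct / 100.0 for n, pct in jobs)  # CPUs' worth of actual work
    return total, 100.0 * busy / total, busy - total


# (nPEs, cpu%) copied from the oversubscribed and inefficient job tables
oversubscribed = [(1, 135.5), (1, 140.8)]
inefficient = [(50, 2.0), (40, 18.0), (40, 14.5), (40, 15.5), (40, 24.5),
               (40, 2.5), (40, 2.5), (35, 26.4), (8, 13.4), (8, 13.3)]

for label, jobs in (("oversubscribed", oversubscribed), ("inefficient", inefficient)):
    n, avg, excess = cpu_equivalents(jobs)
    print(f"{label}: {n} CPUs used at {avg:.1f}% on average, net {excess:+.1f} CPUs")
```

The net excess comes out at about +0.8 CPUs for the oversubscribed jobs and about -297.6 CPUs for the inefficient ones, matching the page.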

Nodes with Excess Load

As of Tue May 13 18:07:05 EDT 2025 (5 nodes have a high load, offset=1.5):

| node | #CPUs | #slots used | load | excess load |
|---|---|---|---|---|
| 76-03 | 192 | 4 | 5.6 | 1.6 |
| 76-04 | 192 | 8 | 12.1 | 4.1 |
| 76-05 | 128 | 8 | 12.8 | 4.8 |
| 93-03 | 72 | 5 | 7.5 | 2.5 |
| 93-06 | 96 | 40 | 70.0 | 30.0 |

Total excess load = 43.1
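The excess-load column appears to be load minus slots used, and offset=1.5 looks like the threshold above which a node is listed (every listed node exceeds it). The sketch below applies that assumed rule to the five rows. Summing the rounded values gives 43.0, whereas the page reports 43.1, presumably because it sums unrounded loads.

```python
OFFSET = 1.5  # the "offset" value printed in the table header

# (node, #CPUs, slots used, load) from the excess-load table
nodes = [
    ("76-03", 192, 4, 5.6),
    ("76-04", 192, 8, 12.1),
    ("76-05", 128, 8, 12.8),
    ("93-03", 72, 5, 7.5),
    ("93-06", 96, 40, 70.0),
]


def excess_load(used: int, load: float) -> float:
    """Load beyond the number of slots the node's jobs reserved."""
    return load - used


# Assumed rule: list a node when its excess load is above the offset
flagged = [(name, excess_load(used, load)) for name, _, used, load in nodes
           if excess_load(used, load) > OFFSET]
for name, excess in flagged:
    print(f"{name}: excess load {excess:.1f}")
print(f"Total excess load = {sum(e for _, e in flagged):.1f}")
```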

High Memory Jobs

Statistics

| User name | nSlots used | % | Memory reserved [TB] | % | Memory used [TB] | % | vmem used [TB] | maxvmem used [TB] | Ratio resd/maxvm |
|---|---|---|---|---|---|---|---|---|---|
| sylvain | 76 | 35.5% | 2.8633 | 45.1% | 0.1806 | 28.9% | 1.1840 | 1.2158 | 2.4 |
| uribeje | 36 | 16.8% | 1.0742 | 16.9% | 0.0341 | 5.5% | 0.0748 | 0.0762 | 14.1 |
| bourkeb | 8 | 3.7% | 0.9688 | 15.3% | 0.0724 | 11.6% | 0.0652 | 0.2631 | 3.7 |
| figueiroh | 40 | 18.7% | 0.6250 | 9.8% | 0.1576 | 25.2% | 0.1574 | 0.1591 | 3.9 |
| vohsens | 40 | 18.7% | 0.3906 | 6.2% | 0.0188 | 3.0% | 0.0315 | 0.0315 | 12.4 |
| liy | 1 | 0.5% | 0.2930 | 4.6% | 0.1561 | 25.0% | 0.2011 | 0.2431 | 1.2 |
| morrisseyd | 12 | 5.6% | 0.1172 | 1.8% | 0.0026 | 0.4% | 0.0027 | 0.0027 | 44.0 |
| lealc | 1 | 0.5% | 0.0156 | 0.2% | 0.0028 | 0.4% | 0.0030 | 0.0030 | 5.1 |
| Total | 214 | | 6.3477 | | 0.6250 | | 1.7196 | 1.9946 | 3.2 |
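The ratio column is reserved memory divided by maxvmem (sylvain: 2.8633/1.2158 ≈ 2.4; totals: 6.3477/1.9946 ≈ 3.2). A short sketch that recomputes it and marks users above 2.5, a threshold borrowed from the "> 2.5" marker in the past-usage table; whether the live warnings use the same rule is an assumption. Ratios for the smallest users can differ slightly from the page because the inputs shown are rounded to four decimals.

```python
THRESHOLD = 2.5  # assumption: same cut-off as the "> 2.5" marker in the past-usage table

# (user, memory reserved [TB], maxvmem used [TB]) from the Statistics table
rows = [("sylvain", 2.8633, 1.2158), ("uribeje", 1.0742, 0.0762),
        ("bourkeb", 0.9688, 0.2631), ("liy", 0.2930, 0.2431)]


def resd_ratio(reserved: float, maxvmem: float) -> float:
    """Reserved memory relative to the peak virtual memory actually used."""
    return reserved / maxvmem


for user, resd, maxvm in rows:
    ratio = resd_ratio(resd, maxvm)
    mark = f"> {THRESHOLD}" if ratio > THRESHOLD else ""
    print(f"{user:10s} {ratio:6.1f} {mark}")
```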

Warnings

17 high memory jobs produced a warning:

1 for bourkeb, 1 for figueiroh, 1 for lealc, 10 for sylvain, 3 for uribeje, and 1 for vohsens. Details for each job can be found here.


Breakdown by Queue


Current Usage by Queue

| Queues (running slots) | Total | Limit | Fill factor | Efficiency |
|---|---|---|---|---|
| sThC.q=0 mThC.q=460 lThC.q=162 uThC.q=17 | 639 | 4952 | 12.9% | 68.6% |
| sThM.q=40 mThM.q=145 lThM.q=12 uThM.q=17 | 214 | 4616 | 4.6% | 197.9% |
| sTgpu.q=0 mTgpu.q=2 lTgpu.q=0 qgpu.iq=0 | 2 | 104 | 1.9% | 141.0% |
| uTxlM.rq=0 | 0 | 536 | 0.0% | |
| lThMuVM.tq=0 | 0 | 384 | 0.0% | |
| lTb2g.q=0 | 0 | 2 | 0.0% | |
| lTIO.sq=0 | 0 | 8 | 0.0% | |
| lTWFM.sq=0 | 0 | 4 | 0.0% | |
| qrsh.iq=19 | 19 | 68 | 27.9% | 0.4% |

Total: 874
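The fill factor is the running-slot total of a queue class divided by that class's limit (639/4952 ≈ 12.9%). A sketch that recomputes the totals and fill factors from the per-queue counts; the class labels in the dictionary are chosen here for readability and are not scheduler names.

```python
def fill_factor(total: int, limit: int) -> float:
    """Percentage of a queue class's slot limit currently in use."""
    return 100.0 * total / limit


# Running slots per queue and the slot limit of each class (from the queue table);
# the class labels are illustrative only.
classes = {
    "hiCPU": ({"sThC.q": 0, "mThC.q": 460, "lThC.q": 162, "uThC.q": 17}, 4952),
    "hiMem": ({"sThM.q": 40, "mThM.q": 145, "lThM.q": 12, "uThM.q": 17}, 4616),
    "GPU": ({"sTgpu.q": 0, "mTgpu.q": 2, "lTgpu.q": 0, "qgpu.iq": 0}, 104),
    "interactive": ({"qrsh.iq": 19}, 68),
}

grand_total = 0
for label, (queues, limit) in classes.items():
    total = sum(queues.values())
    grand_total += total
    print(f"{label}: {total}/{limit} slots, fill factor {fill_factor(total, limit):.1f}%")
print(f"Total: {grand_total}")  # Total: 874
```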


Avail Slots/Wait Job(s)

Available Slots

As of Tue May 13 18:07:04 EDT 2025, for minMem=1.0G/slot:

| hgrp | avail(slots) | free(load) | unresd(mem) | total(nCPU) | total(mem) | unused(slots) | unused(load) | unreserved(mem) | unused(mem) |
|---|---|---|---|---|---|---|---|---|---|
| @hicpu-hosts | 0 | 0.0 | 0.0G | 0 | 37.6T | -781 (0.0%) | -5.8 (0.0%) | 29.6T (78.6%) | 33.7T (89.7%) |
| @himem-hosts | 0 | 0.0 | 0.0G | 0 | 35.3T | -729 (0.0%) | -5.5 (0.0%) | 27.1T (76.9%) | 31.6T (89.6%) |
| @xlmem-hosts | 0 | 0.0 | 0.0G | 0 | 7.9T | -40 (0.0%) | -0.7 (0.0%) | 7.5T (95.0%) | 7.7T (98.1%) |
| @gpu-hosts | 102 | 103.9 | 750.2G | 104 | 0.7T | 102 (98.1%) | 103.9 (99.9%) | 0.7T (99.4%) | 0.7T (90.1%) |

For @gpu-hosts, this is 7.4G of unreserved memory and 6.7G of unused memory per unused slot.

GPU Usage

Tue May 13 18:07:09 EDT 2025, hostgroup: @gpu-hosts (3 hosts)

| hostname | mem total (GB) | mem used (GB) | mem resd (GB) | #GPU a/u | nCPU | slots used | load | slots free | CPUs unused |
|---|---|---|---|---|---|---|---|---|---|
| compute-50-01 | 503.3 | 35.5 | 467.8 | 4/2 | 64 | 1 | 1.3 | 63 | 62.7 |
| compute-79-01 | 125.5 | 24.4 | 101.1 | 2/1 | 20 | 1 | 1.4 | 19 | 18.6 |
| compute-79-02 | 125.5 | 14.4 | 111.1 | 2/0 | 20 | 0 | 0.1 | 20 | 19.9 |

Total #GPU=8, used=3 (37.5%)

Waiting Job(s)

As of Tue May 13 18:07:04 EDT 2025, 1 job waiting for vohsens:

| jobID | jobName | user | age | nPEs | memReqd | queue | taskID |
|---|---|---|---|---|---|---|---|
| 7584385 | phyloflash_pool | vohsens | +1:19 | 40 | 400.0 | sThM.q | 35-66:1 |

| quota rule | resource=value/limit | %used | filter |
|---|---|---|---|
| max_slots_per_user/1 | slots=40/840 | 4.8% | for vohsens |
| max_hM_slots_per_user/1 | slots=40/840 | 4.8% | for vohsens in queue sThM.q |
| max_mem_res_per_user/2 | mem_res=400.0G/8.944T | 4.4% | for vohsens in queue uThM.q |

Overall Quota Usage

| quota rule | resource=value/limit | %used | filter |
|---|---|---|---|
| total_gpus/1 | num_gpu=3/8 | 37.5% | for * in queue mTgpu.q |
| total_mem_res/2 | mem_res=6.352T/35.78T | 17.8% | for * in queue uThM.q |
| total_slots/1 | slots=877/5960 | 14.7% | for * |
| total_mem_res/1 | mem_res=2.482T/39.94T | 6.2% | for * in queue uThC.q |
| blast2GO/1 | slots=4/110 | 3.6% | for * |
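The %used column is the consumed value divided by the rule's limit. The T limits shown here match the G limits in the Resource Limits section if 1T = 1024G (36637G → 35.78T, 40902G → 39.94T, 9159G → 8.944T); that binary-unit reading is an assumption inferred from these numbers. A sketch with hypothetical helper names:

```python
GIB_PER_TIB = 1024  # assumption: consistent with 36637G being shown as 35.78T


def g_to_t(value_g: float) -> float:
    """Convert a G figure from the limits listing to the T figure shown in the quota table."""
    return value_g / GIB_PER_TIB


def pct_used(value: float, limit: float) -> float:
    """The %used column: consumed value over the rule's limit."""
    return 100.0 * value / limit


# total_mem_res/2: 6.352T reserved against the 36637G hiMem limit
print(f"{g_to_t(36637):.2f}T, {pct_used(6.352, g_to_t(36637)):.1f}% used")
# total_mem_res/1: 2.482T reserved against the 40902G hiCPU limit
print(f"{g_to_t(40902):.2f}T, {pct_used(2.482, g_to_t(40902)):.1f}% used")
# total_slots/1: 877 slots against 5960
print(f"{pct_used(877, 5960):.1f}% used")
```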


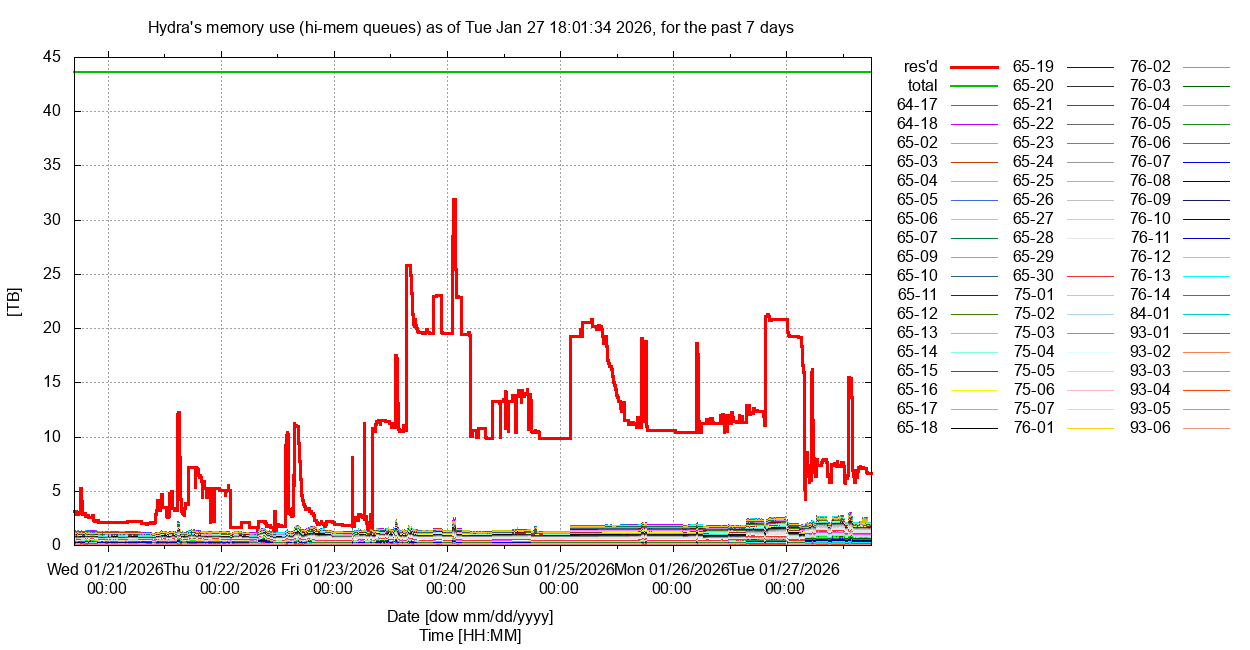

Memory Usage

Reserved Memory, All High-Memory Queues


Current Memory Quota Usage

As of Tue May 13 18:07:05 EDT 2025:

| quota rule | resource=value/limit | %used | filter |
|---|---|---|---|
| total_mem_res/1 | mem_res=2.482T/39.94T | 6.2% | for * in queue uThC.q |
| total_mem_res/2 | mem_res=6.352T/35.78T | 17.8% | for * in queue uThM.q |

Current Memory Usage by Compute Node, High Memory Nodes Only

hostgroup: @himem-hosts (54 hosts)

| hostname | mem avail (GB) | mem used (GB) | mem resd (GB) | mem unused (GB) | mem unresd (GB) | nCPU | slots used | load | slots free | CPUs unused |
|---|---|---|---|---|---|---|---|---|---|---|
| compute-64-17 | 503.3 | 67.7 | 300.0 | 435.6 | 203.3 | 32 | 20 | 20.4 | 12 | 11.6 |
| compute-64-18 | 503.3 | 33.1 | 120.0 | 470.2 | 383.3 | 32 | 12 | 3.8 | 20 | 28.2 |
| compute-65-02 | 503.5 | 91.9 | 112.0 | 411.6 | 391.5 | 64 | 8 | 8.0 | 56 | 56.0 |
| compute-65-03 | 503.5 | 71.9 | 194.0 | 431.6 | 309.5 | 64 | 56 | 52.7 | 8 | 11.3 |
| compute-65-04 | 503.5 | 41.5 | 4.0 | 462.0 | 499.5 | 64 | 2 | 2.0 | 62 | 62.0 |
| compute-65-05 | 503.5 | 38.3 | 16.0 | 465.2 | 487.5 | 64 | 1 | 1.1 | 63 | 62.9 |
| compute-65-06 | 503.5 | 39.1 | 20.0 | 464.4 | 483.5 | 64 | 4 | 3.5 | 60 | 60.5 |
| compute-65-07 | 503.5 | 43.4 | 2.0 | 460.1 | 501.5 | 64 | 1 | 1.1 | 63 | 62.9 |
| compute-65-09 | 503.5 | 66.5 | 128.0 | 437.0 | 375.5 | 64 | 16 | 7.4 | 48 | 56.6 |
| compute-65-10 | 503.5 | 53.6 | 280.0 | 449.9 | 223.5 | 64 | 35 | 2.2 | 29 | 61.8 |
| compute-65-11 | 503.5 | 37.0 | 8.0 | 466.5 | 495.5 | 64 | 1 | 1.1 | 63 | 62.9 |
| compute-65-12 | 503.5 | 32.5 | 20.0 | 471.0 | 483.5 | 64 | 4 | 3.3 | 60 | 60.7 |
| compute-65-13 | 503.5 | 35.2 | 2.0 | 468.3 | 501.5 | 64 | 1 | 1.0 | 63 | 63.0 |
| compute-65-14 | 503.5 | 35.8 | 20.0 | 467.7 | 483.5 | 64 | 4 | 3.2 | 60 | 60.8 |
| compute-65-15 | 503.5 | 38.5 | 2.0 | 465.0 | 501.5 | 64 | 50 | 36.3 | 14 | 27.7 |
| compute-65-16 | 503.5 | 58.1 | 128.0 | 445.4 | 375.5 | 64 | 16 | 7.4 | 48 | 56.6 |
| compute-65-17 | 503.5 | 120.7 | 432.0 | 382.8 | 71.5 | 64 | 8 | 8.0 | 56 | 56.0 |
| compute-65-18 | 503.5 | 92.7 | 112.0 | 410.8 | 391.5 | 64 | 8 | 8.2 | 56 | 55.8 |
| compute-65-19 | 503.5 | 37.2 | 400.0 | 466.3 | 103.5 | 64 | 8 | 1.2 | 56 | 62.8 |
| compute-65-21 | 503.5 | 38.7 | 20.0 | 464.8 | 483.5 | 64 | 4 | 4.0 | 60 | 60.0 |
| compute-65-22 | 503.5 | 35.0 | 20.0 | 468.5 | 483.5 | 64 | 4 | 3.7 | 60 | 60.3 |
| compute-65-23 | 503.5 | 34.2 | 8.0 | 469.3 | 495.5 | 64 | 8 | 8.2 | 56 | 55.8 |
| compute-65-24 | 503.5 | 44.7 | 40.0 | 458.8 | 463.5 | 64 | 40 | 1.1 | 24 | 62.9 |
| compute-65-25 | 503.5 | 34.1 | 20.0 | 469.4 | 483.5 | 64 | 4 | 3.8 | 60 | 60.2 |
| compute-65-26 | 503.5 | 35.3 | 400.0 | 468.2 | 103.5 | 64 | 8 | 1.1 | 56 | 63.0 |
| compute-65-27 | 503.5 | 38.6 | 60.0 | 464.9 | 443.5 | 64 | 40 | 5.0 | 24 | 59.0 |
| compute-65-28 | 503.5 | 52.9 | 128.0 | 450.6 | 375.5 | 64 | 16 | 6.8 | 48 | 57.2 |
| compute-65-29 | 503.5 | 36.8 | 20.0 | 466.7 | 483.5 | 64 | 4 | 3.4 | 60 | 60.6 |
| compute-65-30 | 503.5 | 37.1 | 20.0 | 466.4 | 483.5 | 64 | 4 | 4.1 | 60 | 59.9 |
| compute-75-01 | 1007.4 | 57.5 | 128.0 | 949.9 | 879.4 | 128 | 16 | 9.4 | 112 | 118.6 |
| compute-75-02 | 1007.5 | 104.9 | 992.0 | 902.6 | 15.5 | 128 | 8 | 8.0 | 120 | 120.0 |
| compute-75-03 | 755.5 | 35.2 | 2.0 | 720.3 | 753.5 | 128 | 8 | 8.0 | 120 | 120.0 |
| compute-75-04 | 755.5 | 38.5 | 2.0 | 717.0 | 753.5 | 128 | 8 | 8.1 | 120 | 119.9 |
| compute-75-05 | 755.5 | 42.2 | 40.0 | 713.3 | 715.5 | 128 | 40 | 1.1 | 88 | 126.9 |
| compute-75-06 | 755.5 | 189.3 | 640.0 | 566.2 | 115.5 | 128 | 40 | 34.8 | 88 | 93.2 |
| compute-75-07 | 755.5 | 98.5 | 112.0 | 657.0 | 643.5 | 128 | 8 | 8.1 | 120 | 119.9 |
| compute-76-03 | 1007.4 | 40.2 | 20.5 | 967.2 | 986.9 | 128 | 4 | 3.8 | 124 | 124.2 |
| compute-76-04 | 1007.4 | 132.8 | 432.0 | 874.6 | 575.4 | 128 | 8 | 8.1 | 120 | 119.9 |
| compute-76-05 | 1007.4 | 42.4 | 32.0 | 965.0 | 975.4 | 128 | 8 | 12.8 | 120 | 115.2 |
| compute-76-06 | 1007.4 | 53.0 | 2.0 | 954.4 | 1005.4 | 128 | 1 | 1.1 | 127 | 126.9 |
| compute-76-07 | 1007.4 | 148.9 | 432.0 | 858.5 | 575.4 | 128 | 8 | 8.3 | 120 | 119.7 |
| compute-76-08 | 1007.4 | 239.0 | 300.0 | 768.4 | 707.4 | 128 | 1 | 1.5 | 127 | 126.5 |
| compute-76-09 | 1007.4 | 47.6 | 20.0 | 959.8 | 987.4 | 128 | 4 | 3.8 | 124 | 124.2 |
| compute-76-10 | 1007.4 | 146.8 | 432.0 | 860.6 | 575.4 | 128 | 8 | 8.1 | 120 | 119.9 |
| compute-76-11 | 1007.4 | 148.9 | 432.0 | 858.5 | 575.4 | 128 | 8 | 8.5 | 120 | 119.5 |
| compute-76-12 | 1007.4 | 66.8 | 594.0 | 940.6 | 413.4 | 128 | 79 | 29.3 | 49 | 98.7 |
| compute-76-13 | 1007.4 | 47.2 | 60.0 | 960.2 | 947.4 | 128 | 40 | 7.0 | 88 | 121.0 |
| compute-76-14 | 1007.4 | 76.0 | 130.0 | 931.4 | 877.4 | 128 | 17 | 9.1 | 111 | 118.9 |
| compute-84-01 | 881.1 | 327.6 | 20.0 | 553.5 | 861.1 | 112 | 4 | 3.9 | 108 | 108.1 |
| compute-93-01 | 503.8 | 31.8 | 18.0 | 472.0 | 485.8 | 64 | 9 | 10.3 | 55 | 53.7 |
| compute-93-02 | 755.6 | 122.7 | 432.0 | 632.9 | 323.6 | 72 | 8 | 8.4 | 64 | 63.6 |
| compute-93-03 | 755.6 | 40.5 | 22.0 | 715.1 | 733.6 | 72 | 5 | 7.5 | 67 | 64.5 |
| compute-93-04 | 755.6 | 39.6 | 4.0 | 716.0 | 751.6 | 72 | 2 | 2.5 | 70 | 69.5 |
| Totals | 36134.0 | 3740.0 (10.4%) | 8334.5 (23.1%) | | | 4616 | 729 (15.8%) | 424.4 (9.2%) | | |

Most unreserved/unused memory (1005.4/954.4GB) is on compute-76-06 with 127/126.9 slots/CPUs free/unused.

hostgroup: @xlmem-hosts (4 hosts)

| hostname | mem avail (GB) | mem used (GB) | mem resd (GB) | mem unused (GB) | mem unresd (GB) | nCPU | slots used | load | slots free | CPUs unused |
|---|---|---|---|---|---|---|---|---|---|---|
| compute-76-01 | 1511.4 | 35.6 | -0.0 | 1475.8 | 1511.4 | 192 | 0 | 0.1 | 192 | 191.9 |
| compute-76-02 | 1511.4 | 34.7 | -0.0 | 1476.7 | 1511.4 | 192 | 0 | 0.1 | 192 | 191.9 |
| compute-93-05 | 2016.3 | 36.5 | 0.0 | 1979.8 | 2016.3 | 96 | 0 | 0.0 | 96 | 96.0 |
| compute-93-06 | 3023.9 | 50.0 | 400.4 | 2973.9 | 2623.5 | 56 | 40 | 40.8 | 16 | 15.2 |
| Totals | 8063.0 | 156.8 (1.9%) | 400.4 (5.0%) | | | 536 | 40 (7.5%) | 41.1 (7.7%) | | |

Most unreserved/unused memory (2623.5/2973.9GB) is on compute-93-06 with 16/15.2 slots/CPUs free/unused.
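The derived columns in these host tables follow simple differences: unused memory = avail − used, unresd memory = avail − resd, free slots = nCPU − slots used, and unused CPUs = nCPU − load. The "most unreserved/unused memory" line then looks like the host with the largest unresd value. A sketch under those inferred relationships, using the four @xlmem-hosts rows; the `Host` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    avail: float  # memory available [GB]
    used: float   # memory used [GB]
    resd: float   # memory reserved [GB]
    ncpu: int
    slots: int    # slots used
    load: float

    @property
    def unused_mem(self) -> float:
        return self.avail - self.used

    @property
    def unresd_mem(self) -> float:
        return self.avail - self.resd

    @property
    def free_slots(self) -> int:
        return self.ncpu - self.slots

    @property
    def unused_cpus(self) -> float:
        return self.ncpu - self.load


xlmem = [
    Host("compute-76-01", 1511.4, 35.6, 0.0, 192, 0, 0.1),
    Host("compute-76-02", 1511.4, 34.7, 0.0, 192, 0, 0.1),
    Host("compute-93-05", 2016.3, 36.5, 0.0, 96, 0, 0.0),
    Host("compute-93-06", 3023.9, 50.0, 400.4, 56, 40, 40.8),
]

# Assumed selection rule: the host with the most unreserved memory
best = max(xlmem, key=lambda h: h.unresd_mem)
print(f"Most unreserved/unused memory ({best.unresd_mem:.1f}/{best.unused_mem:.1f}GB) "
      f"is on {best.name} with {best.free_slots}/{best.unused_cpus:.1f} slots/CPUs free/unused.")
```

Run on these rows, it reproduces the compute-93-06 sentence above.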

Past Memory Usage vs Memory Reservation

Past memory use in hi-mem queues between 04/30/25 and 05/07/25.

Queues: ?ThM.q

| user name | jobs/slots (total) | elapsed time [d] (total) | eff. [%] (mean) | reserved [GB] (mean) | maxvmem [GB] (mean) | average [GB] (mean) | ratio resd/maxvmem (mean) | flag |
|---|---|---|---|---|---|---|---|---|
| steierj | 2/20 | 0.00 | 5.3 | 160.0 | 0.0 | 0.0 | 0.0 | |
| ariasc | 1/20 | 0.00 | 121.1 | 600.0 | 0.0 | 0.0 | 0.0 | |
| sandovalarangos | 2/16 | 0.00 | 61.6 | 32.0 | 58.4 | 2.7 | 0.5 | |
| blackburnrc | 1/20 | 0.00 | 71.2 | 1099.8 | 495.8 | 23.6 | 2.2 | |
| sossajef | 2/2 | 0.01 | 99.6 | 11.1 | 0.2 | 0.1 | 51.4 | > 2.5 |
| henriquemartinsp | 636/6360 | 0.01 | 5.7 | 300.0 | 0.0 | 0.0 | 12289.9 | > 2.5 |
| jkim | 6/1152 | 0.02 | 12.1 | 200.0 | 739.6 | 3.3 | 0.3 | |
| jassoj | 2/2 | 0.02 | 446.1 | 128.0 | 136.9 | 136.6 | 0.9 | |
| macguigand | 16/92 | 0.09 | 62.5 | 135.2 | 34.6 | 1.0 | 3.9 | > 2.5 |
| campanam | 9/198 | 0.13 | 71.1 | 175.1 | 80.0 | 3.9 | 2.2 | |
| palmerem | 190/190 | 0.23 | 68.0 | 215.6 | 6.4 | 5.1 | 33.5 | > 2.5 |
| radicev | 23/59 | 0.27 | 63.5 | 451.7 | 187.4 | 57.5 | 2.4 | |
| hydem2 | 224/949 | 0.31 | 27.8 | 24.5 | 11.7 | 5.4 | 2.1 | |
| beckerm | 4/32 | 1.18 | 89.6 | 80.4 | 14.6 | 14.2 | 5.5 | > 2.5 |
| collinsa | 259/2072 | 1.23 | 12.3 | 96.0 | 34.3 | 0.3 | 2.8 | > 2.5 |
| zhangy | 15/60 | 1.87 | 32.4 | 50.0 | 10.2 | 4.1 | 4.9 | > 2.5 |
| byerlyp | 11/182 | 2.17 | 22.2 | 43.8 | 17.9 | 10.8 | 2.5 | |
| quattrinia | 7404/7404 | 2.28 | 69.6 | 16.0 | 1.2 | 0.7 | 13.8 | > 2.5 |
| bayarkhangaia | 11/260 | 2.29 | 32.7 | 889.9 | 486.5 | 38.7 | 1.8 | |
| horowitzj | 2183/2183 | 3.74 | 94.3 | 16.0 | 2.5 | 1.4 | 6.3 | > 2.5 |
| uribeje | 30/364 | 3.82 | 69.9 | 246.5 | 12.0 | 6.6 | 20.5 | > 2.5 |
| holmk | 20/40 | 5.20 | 118.9 | 400.0 | 304.6 | 304.4 | 1.3 | |
| castanedaricos | 17/253 | 5.31 | 15.1 | 376.4 | 118.7 | 26.9 | 3.2 | > 2.5 |
| macdonaldk | 54/414 | 6.18 | 80.5 | 158.9 | 99.0 | 47.8 | 1.6 | |
| bourkeb | 50/451 | 7.08 | 77.4 | 409.0 | 15.6 | 2.6 | 26.2 | > 2.5 |
| akelbert | 33/70 | 7.92 | 49.9 | 160.5 | 107.6 | 87.7 | 1.5 | |
| figueiroh | 3397/13588 | 8.05 | 21.5 | 64.0 | 32.6 | 32.2 | 2.0 | |
| morrisseyd | 12132/12132 | 18.40 | 23.7 | 16.0 | 0.9 | 0.4 | 18.6 | > 2.5 |
| yancos | 975/975 | 21.40 | 98.5 | 98.6 | 8.7 | 7.5 | 11.4 | > 2.5 |
| pappalardop | 491/491 | 25.93 | 99.8 | 290.6 | 1.2 | 1.1 | 247.2 | > 2.5 |
| dikowr | 71/568 | 54.50 | 83.0 | 400.0 | 215.9 | 80.4 | 1.9 | |
| all | 28271/50619 | 179.66 | 73.0 | 251.5 | 97.0 | 43.2 | 2.6 | > 2.5 |

Queues: ?TxlM.rq

| user name | jobs/slots (total) | elapsed time [d] (total) |
|---|---|---|
| all | 0/0 | 0.00 |


Resource Limits

Limit slots for all users together:

- users * to slots=5960
- users * queues sThC.q,lThC.q,mThC.q,uThC.q to slots=5176
- users * queues sThM.q,mThM.q,lThM.q,uThM.q to slots=4680
- users * queues uTxlM.rq to slots=536

Limit slots/user for all queues:

- users {*} to slots=840

Limit slots/user in hiCPU queues:

- users {*} queues {sThC.q} to slots=840
- users {*} queues {mThC.q} to slots=840
- users {*} queues {lThC.q} to slots=431
- users {*} queues {uThC.q} to slots=143

Limit slots/user for hiMem queues:

- users {*} queues {sThM.q} to slots=840
- users {*} queues {mThM.q} to slots=585
- users {*} queues {lThM.q} to slots=390
- users {*} queues {uThM.q} to slots=73

Limit slots/user for the xlMem restricted queue:

- users {*} queues {uTxlM.rq} to slots=536

Limit total reserved memory for all users per queue type:

- users * queues sThC.q,mThC.q,lThC.q,uThC.q to mem_res=40902G
- users * queues sThM.q,mThM.q,lThM.q,uThM.q to mem_res=36637G
- users * queues uTxlM.rq to mem_res=8063G

Limit reserved memory per user for specific queues:

- users {*} queues sThC.q,mThC.q,lThC.q,uThC.q to mem_res=10225G
- users {*} queues sThM.q,mThM.q,lThM.q,uThM.q to mem_res=9159G
- users {*} queues uTxlM.rq to mem_res=8063G

Limit slots/user for interactive (qrsh) queues:

- users {*} queues {qrsh.iq} to slots=16

Limit GPUs for all users in GPU queues to the available number of GPUs:

- users * queues {sTgpu.q,mTgpu.q,lTgpu.q,qgpu.iq} to num_gpu=8

Limit GPUs per user in all the GPU queues:

- users {*} queues sTgpu.q,mTgpu.q,lTgpu.q,qgpu.iq to num_gpu=4

Limit GPUs per user in each GPU queue:

- users {*} queues {sTgpu.q} to num_gpu=4
- users {*} queues {mTgpu.q} to num_gpu=3
- users {*} queues {lTgpu.q} to num_gpu=2
- users {*} queues {qgpu.iq} to num_gpu=1

Limit to set aside a slot for blast2GO:

- users * queues !lTb2g.q hosts {@b2g-hosts} to slots=110
- users * queues lTb2g.q hosts {@b2g-hosts} to slots=1
- users {*} queues lTb2g.q hosts {@b2g-hosts} to slots=1

Limit total bigtmp concurrent requests per user:

- users {*} to big_tmp=25

Limit total number of IDL licenses per user:

- users {*} to idlrt_license=102

Limit slots for io queue per user:

- users {*} queues {lTIO.sq} to slots=8

Limit slots for io queue per user:

- users {*} queues {lTWFM.sq} to slots=2

Limit the number of concurrent jobs per user for some queues:

- users {*} queues {uTxlM.rq} to no_concurrent_jobs=3
- users {*} queues {lTIO.sq} to no_concurrent_jobs=2
- users {*} queues {lWFM.sq} to no_concurrent_jobs=1
- users {*} queues {qrsh.iq} to no_concurrent_jobs=4
- users {*} queues {qgpu.iq} to no_concurrent_jobs=1
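These limits read like Grid Engine resource quota sets, where (as we understand the scheduler's semantics) only the first matching rule within each set applies and a request must satisfy every set. The sketch below models that for two of the per-user slot sets listed above. The `Rule` type, the usage dictionary, and the helper names are hypothetical, and the model ignores host scopes, consumables other than slots, and pending-job accounting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """One simplified rule: a queue filter (None = any queue) and a slot limit."""
    queues: Optional[frozenset[str]]
    limit: int


def first_match(rule_set: list[Rule], queue: str) -> Optional[Rule]:
    """Within a rule set, only the first rule whose filter matches applies."""
    for rule in rule_set:
        if rule.queues is None or queue in rule.queues:
            return rule
    return None


def held(rule: Rule, usage: dict[str, int]) -> int:
    """Slots the user already holds in the queues covered by the rule."""
    return sum(n for q, n in usage.items() if rule.queues is None or q in rule.queues)


def fits(rule_sets: list[list[Rule]], queue: str, usage: dict[str, int], request: int) -> bool:
    """A request is allowed only if no rule set's matching rule would be exceeded."""
    for rule_set in rule_sets:
        rule = first_match(rule_set, queue)
        if rule is not None and held(rule, usage) + request > rule.limit:
            return False
    return True


# Two of the per-user rule sets listed above (slots only)
all_queues = [Rule(None, 840)]
hicpu = [Rule(frozenset({"sThC.q"}), 840), Rule(frozenset({"mThC.q"}), 840),
         Rule(frozenset({"lThC.q"}), 431), Rule(frozenset({"uThC.q"}), 143)]
rule_sets = [all_queues, hicpu]

# Hypothetical user already holding 400 slots in lThC.q and 120 in uThC.q
usage = {"lThC.q": 400, "uThC.q": 120}
print(fits(rule_sets, "uThC.q", usage, 20))   # True: 140 <= 143 and 540 <= 840
print(fits(rule_sets, "uThC.q", usage, 40))   # False: 160 > 143
print(fits(rule_sets, "mThC.q", usage, 400))  # False: 920 > 840 overall
```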


Disk Usage & Quota

As of Tue May 13 17:06:02 EDT 2025

Disk Usage

| Filesystem | Size | Used | Avail | Capacity (disk/inodes) | Mounted on |
|---|---|---|---|---|---|
| netapp-fas83:/vol_home | 22.05T | 17.93T | 4.12T | 82%/12% | /home |
| netapp-fas83-n02:/vol_data_public | 142.50T | 48.26T | 94.24T | 34%/3% | /data/public |
| netapp-fas83-n02:/vol_pool_public | 230.00T | 84.32T | 145.68T | 37%/1% | /pool/public |
| gpfs01:public | 400.00T | 337.42T | 62.58T | 85%/51% | /scratch/public |
| netapp-fas83-n02:/vol_pool_kozakk | 11.00T | 10.72T | 285.32G | 98%/1% | /pool/kozakk |
| netapp-fas83-n02:/vol_pool_nmnh_ggi | 21.00T | 13.80T | 7.20T | 66%/1% | /pool/nmnh_ggi |
| netapp-fas83-n02:/vol_pool_sao_access | 19.95T | 5.49T | 14.46T | 28%/2% | /pool/sao_access |
| netapp-fas83-n02:/vol_pool_sao_rtdc | 10.45T | 907.44G | 9.56T | 9%/1% | /pool/sao_rtdc |
| netapp-fas83-n02:/vol_pool_sylvain | 30.00T | 24.25T | 5.75T | 81%/6% | /pool/sylvain |
| gpfs01:nmnh_bradys | 25.00T | 24.73T | 275.34G | 99%/42% | /scratch/bradys |
| gpfs01:nmnh_kistlerl | 120.00T | 109.12T | 10.88T | 91%/6% | /scratch/kistlerl |
| gpfs01:nmnh_meyerc | 25.00T | 23.96T | 1.04T | 96%/4% | /scratch/meyerc |
| gpfs01:nmnh_quattrinia | 60.00T | 42.40T | 17.60T | 71%/7% | /scratch/nmnh_corals |
| gpfs01:nmnh_ggi | 77.00T | 21.98T | 55.02T | 29%/5% | /scratch/nmnh_ggi |
| gpfs01:nmnh_lab | 25.00T | 9.02T | 15.98T | 37%/3% | /scratch/nmnh_lab |
| gpfs01:nmnh_mammals | 35.00T | 18.15T | 16.85T | 52%/21% | /scratch/nmnh_mammals |
| gpfs01:nmnh_mdbc | 50.00T | 37.00T | 13.00T | 75%/9% | /scratch/nmnh_mdbc |
| gpfs01:nmnh_ocean_dna | 40.00T | 10.51T | 29.49T | 27%/1% | /scratch/nmnh_ocean_dna |
| gpfs01:nzp_ccg | 45.00T | 31.32T | 13.68T | 70%/2% | /scratch/nzp_ccg |
| gpfs01:sao_atmos | 350.00T | 270.95T | 79.05T | 78%/4% | /scratch/sao_atmos |
| gpfs01:sao_cga | 25.00T | 9.50T | 15.50T | 38%/6% | /scratch/sao_cga |
| gpfs01:sao_tess | 50.00T | 24.82T | 25.18T | 50%/83% | /scratch/sao_tess |
| gpfs01:scbi_gis | 80.00T | 33.39T | 46.61T | 42%/35% | /scratch/scbi_gis |
| gpfs01:nmnh_schultzt | 25.00T | 19.19T | 5.81T | 77%/75% | /scratch/schultzt |
| gpfs01:serc_cdelab | 15.00T | 9.81T | 5.19T | 66%/4% | /scratch/serc_cdelab |
| gpfs01:stri_ap | 25.00T | 18.96T | 6.04T | 76%/1% | /scratch/stri_ap |
| gpfs01:sao_sylvain | 70.00T | 47.79T | 22.21T | 69%/47% | /scratch/sylvain |
| gpfs01:usda_sel | 25.00T | 5.48T | 19.52T | 22%/6% | /scratch/usda_sel |
| gpfs01:wrbu | 50.00T | 38.65T | 11.35T | 78%/6% | /scratch/wrbu |
| netapp-fas83-n01:/vol_data_admin | 4.75T | 52.46G | 4.70T | 2%/1% | /data/admin |
| netapp-fas83-n01:/vol_pool_admin | 47.50T | 35.28T | 12.22T | 75%/1% | /pool/admin |
| gpfs01:admin | 20.00T | 3.60T | 16.40T | 18%/31% | /scratch/admin |
| gpfs01:bioinformatics_dbs | 10.00T | 5.00T | 5.00T | 50%/2% | /scratch/dbs |
| gpfs01:tmp | 100.00T | 38.33T | 61.67T | 39%/9% | /scratch/tmp |
| gpfs01:ocio_dpo | 10.00T | 0.00G | 10.00T | 1%/1% | /scratch/ocio_dpo |
| gpfs01:ocio_ids | 5.00T | 0.00G | 5.00T | 0%/1% | /scratch/ocio_ids |
| nas1:/mnt/pool/admin | 20.00T | 7.92T | 12.08T | 40%/1% | /store/admin |
| nas1:/mnt/pool/public | 175.00T | 84.74T | 90.26T | 49%/1% | /store/public |
| nas1:/mnt/pool/nmnh_bradys | 40.00T | 8.11T | 31.89T | 21%/1% | /store/bradys |
| nas2:/mnt/pool/n1p3/nmnh_ggi | 90.00T | 36.28T | 53.72T | 41%/1% | /store/nmnh_ggi |
| nas2:/mnt/pool/nmnh_lab | 40.00T | 12.52T | 27.48T | 32%/1% | /store/nmnh_lab |
| nas2:/mnt/pool/nmnh_ocean_dna | 40.00T | 973.76G | 39.05T | 3%/1% | /store/nmnh_ocean_dna |
| nas1:/mnt/pool/nzp_ccg | 221.95T | 109.08T | 112.87T | 50%/1% | /store/nzp_ccg |
| nas2:/mnt/pool/n1p2/ocio_dpo | 50.00T | 2.93T | 47.07T | 6%/1% | /store/ocio_dpo |
| nas2:/mnt/pool/n1p1/sao_atmos | 750.00T | 477.08T | 272.92T | 64%/1% | /store/sao_atmos |
| nas2:/mnt/pool/n1p2/nmnh_schultzt | 40.00T | 27.07T | 12.93T | 68%/1% | /store/schultzt |
| nas1:/mnt/pool/sao_sylvain | 50.00T | 8.41T | 41.59T | 17%/1% | /store/sylvain |
| nas1:/mnt/pool/wrbu | 80.00T | 10.02T | 69.98T | 13%/1% | /store/wrbu |
| qnas:/hydra | 45.47T | 29.07T | 16.40T | 64%/64% | /qnas/hydra |
| qnas:/nfs-mesa-nanozoomer | 386.54T | 340.14T | 46.39T | 88%/88% | /qnas/mesa |
| qnas:/sil | 3840.36T | 2868.95T | 971.41T | 75%/75% | /qnas/sil |

You can view plots of disk use vs. time for the past 7, 30, or 120 days, as well as plots of disk usage by user or by device (for the past 90 or 240 days, respectively).

Notes

The Capacity column shows the percentage of disk space used and the percentage of inodes used.

Every file consumes an inode, so when too many small files are written to a disk, the file system can run out of inodes and be unable to create new files even though free space remains.

The percentage of inodes used should be lower than or comparable to the percentage of disk space used.

If it is much larger, the disk can become unusable before it is full.
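A quick way to apply this rule of thumb to the Capacity column is to split each "disk%/inode%" entry and flag mounts whose inode percentage is well above their disk percentage. The 1.5× cut-off below is a hypothetical choice for illustration, not a threshold used by the cluster.

```python
def parse_capacity(cap: str) -> tuple[int, int]:
    """Split a Capacity entry such as "50%/83%" into (disk %, inode %)."""
    disk, inodes = (int(part.rstrip("%")) for part in cap.split("/"))
    return disk, inodes


def inode_heavy(capacity: dict[str, str], ratio: float = 1.5) -> list[str]:
    """Mount points whose inode % exceeds `ratio` times their disk % (hypothetical rule)."""
    flagged = []
    for mount, cap in capacity.items():
        disk, inodes = parse_capacity(cap)
        # treat 0% as 1% so nearly empty disks need more than trivial inode use to be flagged
        if inodes > ratio * max(disk, 1):
            flagged.append(mount)
    return flagged


# A few entries from the Disk Usage table
capacity = {
    "/home": "82%/12%",
    "/scratch/public": "85%/51%",
    "/scratch/sao_tess": "50%/83%",
    "/scratch/admin": "18%/31%",
    "/scratch/schultzt": "77%/75%",
}
print(inode_heavy(capacity))  # ['/scratch/sao_tess', '/scratch/admin']
```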

You can view plots of the GPFS IB traffic for the past 1, 7, or 30 days, as well as throughput information.

Disk Quota Report

Volume=NetApp:vol_data_public, mounted as /data/public; default quota: 4.50TB/10.0M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 4.17TB | 92.7% | 5.07M | 50.7% | Alicia Talavera, NMNH - talaveraa |

Volume=NetApp:vol_home, mounted as /home; default quota: 512.0GB/10.0M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 509.0GB | 99.4% | 0.29M | 2.9% | *** Paul Cristofari, SAO/SSP - pcristof |
| 497.1GB | 97.1% | 0.12M | 1.2% | *** Jaiden Edelman, SAO/SSP - jedelman |
| 484.5GB | 94.6% | 0.42M | 4.2% | Adela Roa-Varon, NMNH - roa-varona |
| 478.6GB | 93.5% | 0.24M | 2.4% | Michael Connelly, NMNH - connellym |
| 476.5GB | 93.1% | 3.30M | 33.0% | Heesung Chong, SAO/AMP - hchong |
| 443.6GB | 86.6% | 0.97M | 9.7% | Hyeong-Ahn Kwon, SAO/AMP - hkwon |

Volume=NetApp:vol_pool_nmnh_ggi, mounted as /pool/nmnh_ggi; default quota: 16.00TB/39.0M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 13.76TB | 86.0% | 6.08M | 15.6% | Vanessa Gonzalez, NMNH/LAB - gonzalezv |

Volume=NetApp:vol_pool_public, mounted as /pool/public; default quota: 7.50TB/18.0M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 6.72TB | 89.6% | 13.51M | 75.0% | Ting Wang, NMNH - wangt2 |
| 6.65TB | 88.7% | 0.24M | 1.3% | Xiaoyan Xie, SAO/HEA - xxie |

Volume=GPFS:scratch_public, mounted as /scratch/public; default quota: 15.00TB/38.8M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 13.40TB | 89.3% | 14.03M | 36.2% | Brian Bourke, WRBU - bourkeb |
| 13.30TB | 88.7% | 0.43M | 1.1% | Matthew Girard, NMNH - girardmg |
| 13.20TB | 88.0% | 0.87M | 2.2% | Karen Holm, SMSC - holmk |

Volume=GPFS:scratch_stri_ap, mounted as /scratch/stri_ap; default quota: 5.00TB/12.6M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 14.60TB | 97.3% | 0.05M | 0.4% | *** Carlos Arias, STRI - ariasc (15.0TB/12M) |

Volume=NAS:store_public, mounted as /store/public; default quota: 0.0MB/0.0M

| Disk usage | %quota | #files | %quota | Name, affiliation - username (indiv. quota) |
|---|---|---|---|---|
| 4.80TB | 96.1% | - | - | *** Madeline Bursell, OCIO - bursellm (5.0TB/0M) |
| 4.51TB | 90.1% | - | - | Alicia Talavera, NMNH - talaveraa (5.0TB/0M) |
| 4.39TB | 87.8% | - | - | Mirian Tsuchiya, NMNH/Botany - tsuchiyam (5.0TB/0M) |
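The %quota column is usage divided by the applicable quota, either the volume default or the individual quota in parentheses (509.0GB of 512.0GB ≈ 99.4%; 14.60TB of 15.0TB ≈ 97.3%). The report does not state its rule for the "***" marker, so the 95% cut-off in this sketch is a hypothetical choice that happens to agree with the rows used here.

```python
def pct_quota(used: float, quota: float) -> float:
    """The %quota column: usage over the applicable (default or individual) quota."""
    return 100.0 * used / quota


WARN_AT = 95.0  # hypothetical cut-off; the report does not state its rule for "***"

# (username, usage, quota), each pair in the same unit
entries = [
    ("pcristof", 509.0, 512.0),    # /home, GB, default quota
    ("roa-varona", 484.5, 512.0),  # /home, GB, default quota
    ("ariasc", 14.60, 15.0),       # /scratch/stri_ap, TB, individual quota
    ("talaveraa", 4.17, 4.50),     # /data/public, TB, default quota
]

near_quota = [user for user, used, quota in entries if pct_quota(used, quota) >= WARN_AT]
for user, used, quota in entries:
    print(f"{user:12s} {pct_quota(used, quota):5.1f}%")
print("near quota:", near_quota)
```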

SSD Usage

| Node | Size | Used | Avail | Use% | Resd | Avail (after resd) | Resd% | Resd/Used |
|---|---|---|---|---|---|---|---|---|
| 50-01 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 64-17 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 64-18 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-02 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-03 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-04 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-05 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-06 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-09 | 3.49T | 24.6G | 3.47T | 0.7% | 0.0G | 3.49T | 0.0% | 0.00 |
| 65-10 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-11 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-12 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-13 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-14 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-15 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-16 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-17 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-18 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-19 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-20 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-21 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-22 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-23 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-24 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-25 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-26 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-27 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-28 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-29 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 65-30 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 75-02 | 6.98T | 50.2G | 6.93T | 0.7% | 1.50T | 5.48T | 21.5% | 30.61 |
| 75-03 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| 75-04 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| 75-05 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| 75-06 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| 75-07 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| 76-03 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 76-04 | 1.75T | 12.3G | 1.73T | 0.7% | 0.0G | 1.75T | 0.0% | 0.00 |
| 76-13 | 1.75T | 31.7G | 1.71T | 1.8% | 0.0G | 1.75T | 0.0% | 0.00 |
| 79-01 | 7.28T | 51.2G | 7.22T | 0.7% | 0.0G | 7.28T | 0.0% | 0.00 |
| 79-02 | 7.28T | 51.2G | 7.22T | 0.7% | 0.0G | 7.28T | 0.0% | 0.00 |
| 93-05 | 6.98T | 50.2G | 6.93T | 0.7% | 0.0G | 6.98T | 0.0% | 0.00 |
| Total | 133.2T | 964.6G | 132.3T | 0.7% | 1.50T | 131.7T | 1.1% | 1.59 |

Note: the disk usage and the quota report are compiled four times a day; the SSD usage is updated every 10 minutes.
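The Use%, Resd% and Resd/Used columns of the SSD table can be recomputed from the size, used and reserved figures if T and G are treated as binary multiples (1T = 1024G), an assumption that matches the Total row (1.50T / 964.6G ≈ 1.59). For 75-02, the rounded inputs give about 30.60 rather than the page's 30.61, presumably because the page uses unrounded values. `ssd_ratios` is a hypothetical helper.

```python
G_PER_T = 1024.0  # assumption: T and G on this page are binary multiples


def ssd_ratios(size_t: float, used_g: float, resd_t: float) -> tuple[float, float, float]:
    """Return (Use%, Resd%, Resd/Used) for one /ssd row (size and resd in T, used in G)."""
    use_pct = 100.0 * used_g / (size_t * G_PER_T)
    resd_pct = 100.0 * resd_t / size_t
    resd_over_used = resd_t * G_PER_T / used_g if used_g else 0.0
    return use_pct, resd_pct, resd_over_used


for name, size_t, used_g, resd_t in [("75-02", 6.98, 50.2, 1.50), ("Total", 133.2, 964.6, 1.50)]:
    use_pct, resd_pct, ratio = ssd_ratios(size_t, used_g, resd_t)
    print(f"{name}: Use {use_pct:.1f}%  Resd {resd_pct:.1f}%  Resd/Used {ratio:.2f}")
```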