Research:

Given a random star in the sky, how old is it?

The age of a star determines many aspects of its nature (size, temperature, internal structure). However, unlike many other stellar properties, a star's age is a fundamental property that cannot be directly measured by observations. Only one star, the Sun, has an age that is both precisely and accurately measured, and only because we have the luxury of detailed laboratory studies of Solar System meteorites. For all other stars, we must rely on inferred or estimated ages derived using age-dependent empirical trends or theoretical stellar evolution models. Nevertheless, the ability to derive precise stellar ages is at the core of our picture of stellar evolution, and is thus a crucial piece of information underpinning our physical understanding of many astrophysical paradigms. For example, knowledge of the ages of stars in young star-forming regions is needed to tell us the timescale on which star-forming disks dissipate and thus the maximum time for planets to form around their host stars (e.g., Formation and Evolution of Planetary Systems (FEPS): Primordial Warm Dust Evolution from 3 to 30 Myr around Sun-like Stars; Silverstone et al. 2006). On much larger scales, stellar chronometry has been used to give a lower limit for the age of our Universe (Age Estimates of Globular Clusters in the Milky Way: Constraints on Cosmology; Krauss & Chaboyer 2003).

With this in mind, I am leading a 3-year NSF-funded program to evaluate our best techniques (most accurate, least uncertain, easiest to apply) for determining the ages of stars. Titled Triangulating on the Ages of Stars: Using Open Clusters to Calibrate Stellar Chronometers from Myr to Gyr Ages, this research program will provide critical empirical constraints, using open clusters as our stellar laboratories, on the four most common stellar chronometers (or age-dating techniques):

-

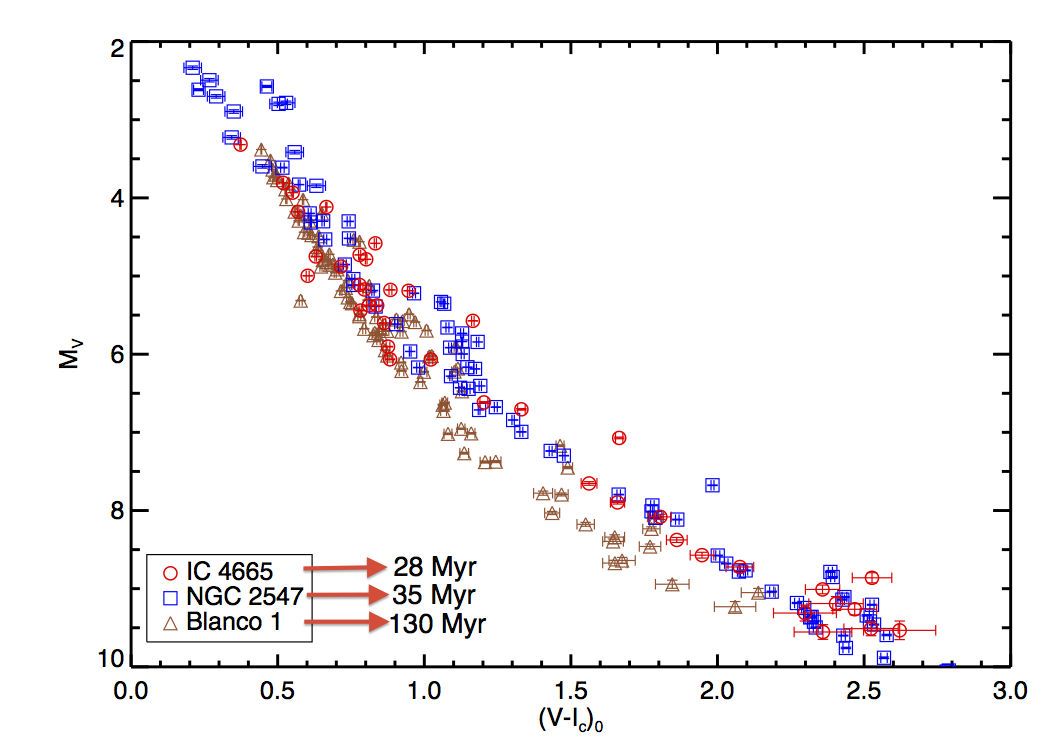

Stellar Isochrones:

Comparing the location of a star on an H-R diagram (or an empirical color-magnitude diagram) with stellar models. This technique is most effective when the observed properties of a star (e.g., its brightness or temperature) change rapidly, e.g., during its evolution onto or off of the main sequence.

-

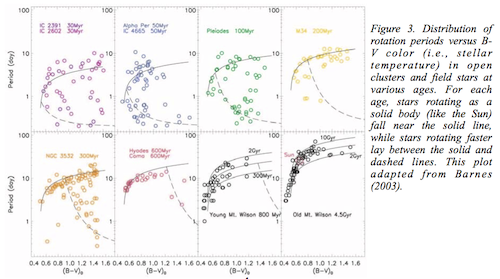

Rotation Rates (Gyrochronology):

Empirical and theoretical models have recently been put forward to explain the spin-down of solar-type stars through angular momentum loss due to magnetized winds. These rotation-age relationships can be used to determine the age of a star with a given rotation rate, regardless of its distance or other details of its environment (e.g., in a cluster or in the field).
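As a rough illustration of how such a rotation-age relationship is applied, the sketch below inverts one published empirical calibration, Barnes (2007), in which the rotation period is the product of a color term and a power law in age. The coefficients are from that paper, not from the research program described here, and the function names are hypothetical.

```python
# Coefficients of the Barnes (2007) gyrochronology calibration (an assumption
# for this sketch; they are not taken from the program described on this page).
A, B, C, N = 0.7725, 0.601, 0.40, 0.5189


def gyro_period(b_minus_v, age_myr):
    """Rotation period in days predicted for a given B-V color and age in Myr."""
    return (age_myr ** N) * A * (b_minus_v - C) ** B


def gyro_age(b_minus_v, period_days):
    """Invert the relation: age in Myr from B-V color and rotation period in days."""
    if b_minus_v <= C:
        raise ValueError("this calibration is only defined for B-V > 0.40")
    color_term = A * (b_minus_v - C) ** B
    return (period_days / color_term) ** (1.0 / N)


# Solar values (B-V = 0.642, P = 26.09 days) as a sanity check.
sun_age_myr = gyro_age(0.642, 26.09)
print(f"Gyrochronology age for solar inputs: {sun_age_myr / 1000.0:.2f} Gyr")
```

Because this calibration is tied to the Sun, solar inputs should return an age close to the Sun's meteoritic age of about 4.6 Gyr. Note that only a color and a rotation period are needed, which is why the method does not depend on the star's distance.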

-

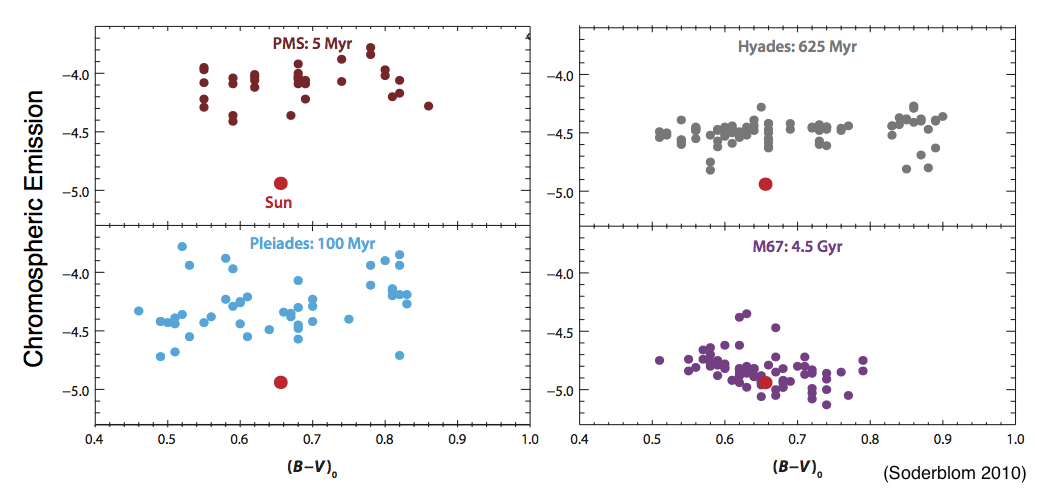

Stellar Activity:

Magnetic activity in stars, seen as chromospheric and coronal emission, has a strong age dependence due to the causal relationship between stellar age, rotation, and activity. Although a complete theoretical picture of this paradigm is still being formulated, empirical relationships between these activity signatures and age can be used to determine stellar ages.

-

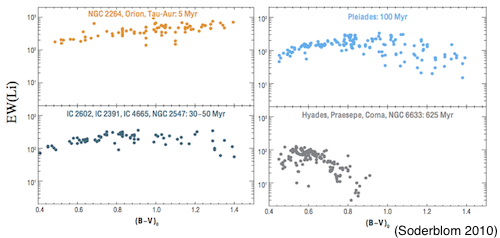

Lithium Abundances:

Lithium, as one of the few metals created in the Big Bang, is present at a specific level in the material that stars form out of. Li is also easily destroyed (by fusion processes that turn Li into He) at the temperatures and pressures typical of stellar interiors. Because we have empirically constrained the initial Li abundance and the rate of its depletion, Li provides an indirect way to determine stellar ages.

Synthesizing the Spectrum of Stars

What is the best technique to measure stellar properties (temperature, size, composition)?

This question is particularly important to astronomers finding and characterizing planets around other stars. For every exoplanet discovered, its properties are fundamentally tied to our understanding of the parent star. Therefore, our understanding of the trends seen in the discovered exoplanets (e.g., more planets are found around stars that are richer in metals; Fischer & Valenti 2005) is only as good as the accuracy of our stellar characterization.

I am currently participating in a collaborative effort to provide better and more homogeneous characterization of exoplanet systems. The Homogeneous Characterization of Transiting Exoplanet Systems (HoSTS) research program will obtain new high-resolution, high signal-to-noise spectra for stars with known transiting planets, derive stellar properties using three different stellar characterization methods, and use an iterative combination of our new stellar properties with the best available radial velocity data and transit photometry to derive an accurate and homogeneous set of well-characterized exoplanets.

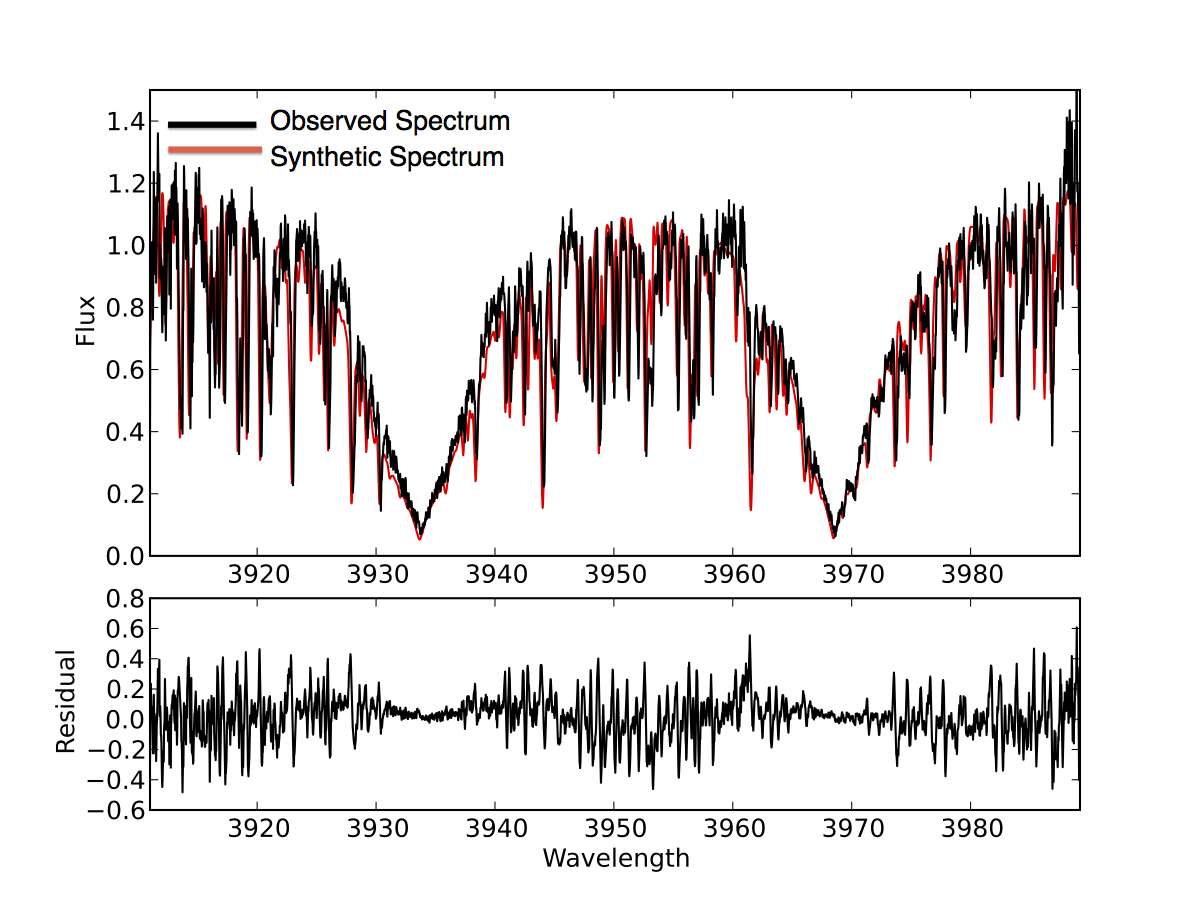

My part in this collaboration is to provide stellar parameters using software I developed, which I informally call Shazam for Stars. This software incorporates the stellar characterization code Spectroscopy Made Easy (SME; Valenti & Piskunov 1996) into a large-scale Monte Carlo analysis to derive precise stellar parameters. The code includes many statistical tools for deriving accurate stellar parameters: it can apply priors on parameters, search the parameters' chi-square space on large and small scales, investigate how the final best-fit parameters depend on the initial guess (a known problem with Levenberg-Marquardt (LM) non-linear least-squares fitting routines), and generate a large grid of synthetic spectra for MCMC-type analysis. In a nutshell, my software works much like the song-recognition software Shazam: you input data (in our case a high-resolution spectrum) and the software outputs all the information you would want (instead of song title and artist, we derive for the star its effective temperature, composition, size information from its surface gravity, and projected rotation rate).
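The initial-guess problem mentioned above can be illustrated with a toy example. The sketch below is not the Shazam for Stars code and does not call SME: it uses a made-up one-parameter chi-square curve with two minima and a simple step-halving local search as a stand-in for an LM fitter. All names are hypothetical. It shows that a local fit can settle in the shallower minimum, and that repeating the fit from several starting points and keeping the lowest chi-square avoids this.

```python
def toy_chi2(x):
    """Made-up chi-square curve: shallow minimum near x = 2, deeper one near x = -2."""
    return (x * x - 4.0) ** 2 + x + 3.0


def local_minimise(func, x0, step=0.5, tol=1e-6):
    """Step-halving local search (a stand-in for LM): only ever moves downhill."""
    x, fx = x0, func(x0)
    while step > tol:
        for candidate in (x - step, x + step):
            f_candidate = func(candidate)
            if f_candidate < fx:
                x, fx = candidate, f_candidate
                break
        else:
            # Neither neighbor improves the fit, so refine the step size.
            step *= 0.5
    return x, fx


def multi_start(func, starts):
    """Run the local search from every starting guess and keep the best result."""
    fits = [local_minimise(func, x0) for x0 in starts]
    return min(fits, key=lambda fit: fit[1]), fits


best, fits = multi_start(toy_chi2, [-3.0, -1.0, 1.0, 3.0])
for start, (x_fit, chi2_fit) in zip([-3.0, -1.0, 1.0, 3.0], fits):
    print(f"start {start:+.1f} -> x = {x_fit:+.3f}, chi2 = {chi2_fit:.3f}")
print(f"best fit: x = {best[0]:+.3f}")
```

Fits started at positive x stay in the shallower minimum, while the multi-start wrapper returns the deeper one near x = -2.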

Astro-informatics & Software Development

Throughout all of the above research, I find a particular need for efficient and effective handling of large amounts of astronomical data. In fact, this is true of most of modern astronomy and astrophysics. The advent of large-scale projects, such as the 2-Micron All-Sky Survey and the Sloan Digital Sky Survey, has revolutionized the way astronomers conduct research. Although these projects constitute ~15 terabytes of data, roughly the size of the entire information content of the Library of Congress, they only hint at what is to come! The Large Synoptic Survey Telescope (LSST), scheduled to see first light in 2014, is estimated to produce > 30 terabytes of data per day as it repeatedly images the entire sky every few nights. The full 10-year survey will produce a 90+ petabyte dataset! This new data-intensive approach to astronomy will open new windows onto large-scale time-domain studies; however, it also poses daunting data-management challenges for the astronomical and broader science communities. In preparation for this inundation of astronomical data, the LSST Corporation has assembled ten key Science Collaborations. These groups have the primary tasks of providing input on future design elements of LSST; developing the preliminary studies, calibrations, simulations, and algorithms that will be required before the survey is in full operational mode; establishing the full science capabilities of the LSST; and actively participating in the commissioning of the telescope.

I am currently a member of the Stellar Population science group, where we are actively developing the methods to efficiently study stars observed by the LSST. My primary focus in this collaboration is looking into the possibility of measuring rotation periods from LSST light curves. This will allow us to determine the ages of millions of stars over a wide variety of ages, Galactic locations, and local environments. We will thereby be able to map out the star formation history of a large proportion of the Milky Way.
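One common way to look for a rotation period in sparsely and unevenly sampled photometry is a Lomb-Scargle periodogram. The sketch below is a minimal pure-Python version run on a synthetic light curve. The cadence, amplitude, noise level, and 7.3-day period are invented for illustration and do not represent real LSST data or the collaboration's actual pipeline.

```python
import math
import random


def lomb_scargle_power(times, fluxes, period):
    """Classical Lomb-Scargle power at one trial period (unnormalized)."""
    omega = 2.0 * math.pi / period
    mean = sum(fluxes) / len(fluxes)
    y = [f - mean for f in fluxes]
    # Time offset tau makes the sine and cosine terms orthogonal.
    tau = math.atan2(sum(math.sin(2.0 * omega * t) for t in times),
                     sum(math.cos(2.0 * omega * t) for t in times)) / (2.0 * omega)
    c = [math.cos(omega * (t - tau)) for t in times]
    s = [math.sin(omega * (t - tau)) for t in times]
    yc = sum(a * b for a, b in zip(y, c))
    ys = sum(a * b for a, b in zip(y, s))
    return 0.5 * (yc * yc / sum(v * v for v in c) + ys * ys / sum(v * v for v in s))


def best_period(times, fluxes, p_min, p_max, n_trials=1000):
    """Scan trial periods on an even frequency grid and return the strongest one."""
    f_min, f_max = 1.0 / p_max, 1.0 / p_min
    best_p, best_power = None, -1.0
    for k in range(n_trials):
        period = 1.0 / (f_min + (f_max - f_min) * k / (n_trials - 1))
        power = lomb_scargle_power(times, fluxes, period)
        if power > best_power:
            best_p, best_power = period, power
    return best_p


# Synthetic light curve: 150 randomly timed visits over 200 days (assumed cadence).
random.seed(1)
true_period = 7.3  # days, invented for this example
times = sorted(random.uniform(0.0, 200.0) for _ in range(150))
fluxes = [1.0 + 0.01 * math.sin(2.0 * math.pi * t / true_period) + random.gauss(0.0, 0.002)
          for t in times]

recovered = best_period(times, fluxes, p_min=1.0, p_max=30.0)
print(f"recovered period: {recovered:.2f} days")
```

A recovered period could then be fed into a rotation-age relationship to estimate the star's age. Real survey data would add complications this sketch ignores, such as spot evolution, multiple filters, and aliasing from the observing cadence.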